Digital Make up Face Generation

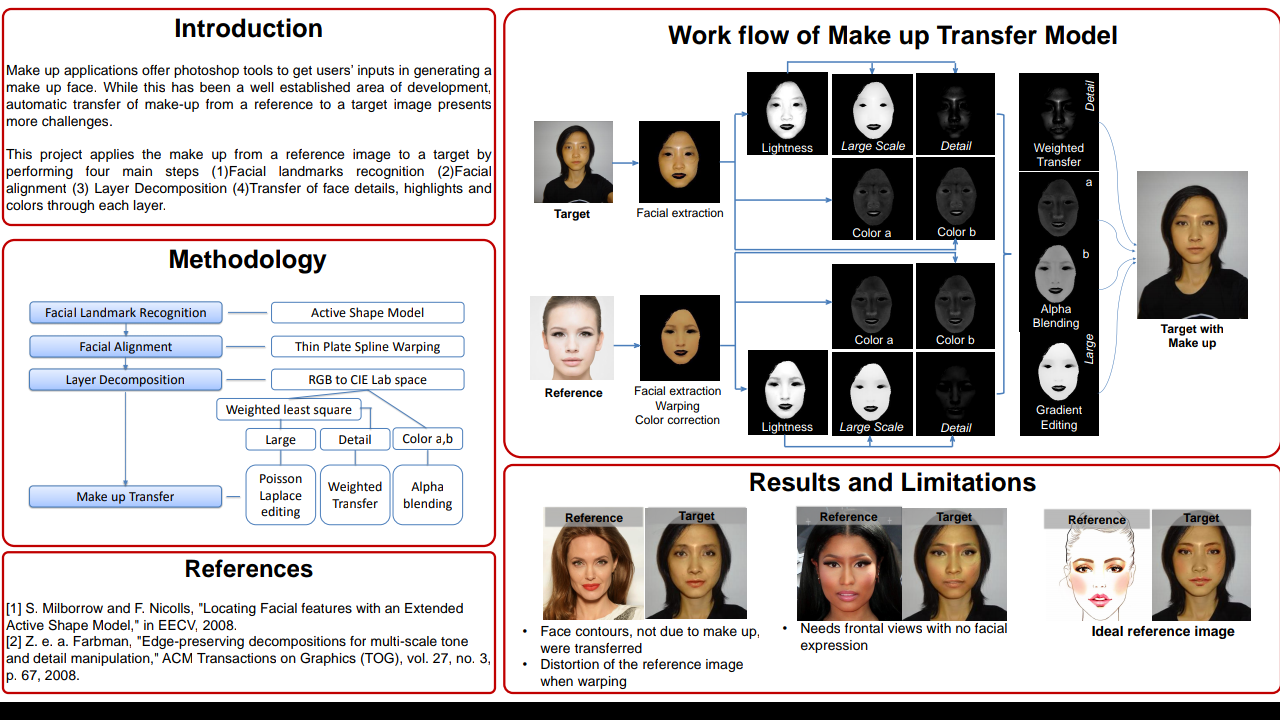

Goal

Current make-up applications rely on Photoshop-style tools to apply make-up to a digital image of the target's face and generate results. While these applications allow customization, they are of little use to a customer who wants to quickly decide which make-up kit to buy at a store. The customer might just want to find out how the make-up look on a cover or billboard would look on her own face. The goal of this project is to take an existing reference image of another subject wearing make-up and transfer the reference's make-up onto the target's face. The application can be further extended to photo retouching and illumination transfer from the reference image to the target.

Methodology

In order to transfer the make-up from the reference onto the target on a pixel-by-pixel basis, the areas of interest must align. Face features such as the eyes, nose, mouth and contours of the face will be recogniz...