Digital Makeup Face Generation

Goal

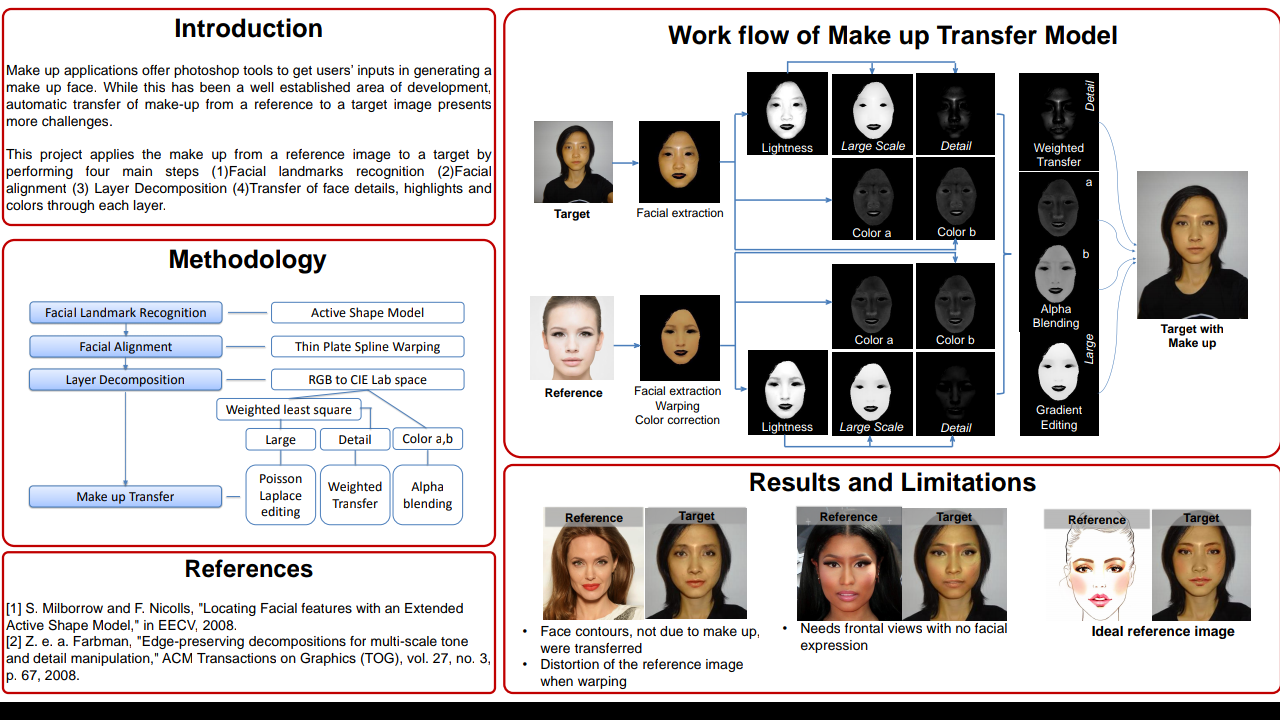

Current makeup applications rely on Photoshop-style tools to apply makeup to a target's digital face and generate results. While these applications allow customization, they are of little use to a customer in a store who wants to decide quickly which makeup kit to buy: she may simply want to see how the look on a cover or billboard would appear on her own face. The goal of this project is to take an existing reference image of another subject wearing makeup and transfer the reference's makeup onto the target's face. The application can be further extended to photo retouching and to illumination transfer from the reference image to the target.

Methodology

To transfer the makeup from the reference onto the target on a pixel-by-pixel basis, the areas of interest must be aligned. Facial features such as the eyes, nose, mouth and face contour will be located using an Active Shape Model (ASM) [1]. The features will then be aligned using Thin Plate Spline warping as proposed in [5]. Once the images are aligned, each will be decomposed in CIELab color space into a lightness layer and a color layer, and the lightness layer will be further decomposed with a weighted least squares (WLS) filter [2] into a large-scale layer, which contains the largest changes in highlights due to makeup, and a small-scale layer, which holds detail that is not part of the applied makeup. Makeup in the large-scale layer will be transferred using gradient-based methods [3]. The small-scale layer of the target can simply be added back in the final result. Finally, the color layer can be transferred through alpha blending.
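The alignment step can be sketched as follows. This is a minimal NumPy illustration of 2-D thin plate spline fitting and backward warping, assuming the landmarks have already been located (for example by the ASM of [1]) and are given as (x, y) arrays listed in the same order for both faces. The function names and the nearest-neighbour sampling are illustrative choices, not the specific method of [5].

```python
import numpy as np


def tps_kernel(r):
    """Thin plate spline radial basis U(r) = r^2 log r, with U(0) defined as 0."""
    out = np.zeros_like(r, dtype=float)
    nz = r > 0
    out[nz] = r[nz] ** 2 * np.log(r[nz])
    return out


def fit_tps(src, dst):
    """Fit a 2-D TPS f with f(src[i]) = dst[i]; src and dst are (n, 2) arrays of (x, y)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = len(src)
    # Radial basis evaluated between every pair of control points.
    K = tps_kernel(np.linalg.norm(src[:, None, :] - src[None, :, :], axis=-1))
    P = np.hstack([np.ones((n, 1)), src])  # affine part [1, x, y]
    A = np.zeros((n + 3, n + 3))
    A[:n, :n] = K
    A[:n, n:] = P
    A[n:, :n] = P.T
    rhs = np.zeros((n + 3, 2))
    rhs[:n] = dst
    params = np.linalg.solve(A, rhs)
    # Control points, non-affine weights (n, 2), affine coefficients (3, 2).
    return src, params[:n], params[n:]


def apply_tps(model, pts):
    """Evaluate a fitted TPS at an (m, 2) array of points."""
    ctrl, weights, affine = model
    pts = np.asarray(pts, dtype=float)
    U = tps_kernel(np.linalg.norm(pts[:, None, :] - ctrl[None, :, :], axis=-1))
    return affine[0] + pts @ affine[1:] + U @ weights


def warp_to_target(ref_img, ref_pts, tgt_pts, out_hw):
    """Backward-warp ref_img so that ref_pts land on tgt_pts (nearest-neighbour sampling)."""
    h, w = out_hw
    model = fit_tps(tgt_pts, ref_pts)  # maps a target pixel to its reference location
    ys, xs = np.mgrid[0:h, 0:w]
    grid = np.column_stack([xs.ravel(), ys.ravel()]).astype(float)
    src = apply_tps(model, grid)
    sx = np.clip(np.rint(src[:, 0]).astype(int), 0, ref_img.shape[1] - 1)
    sy = np.clip(np.rint(src[:, 1]).astype(int), 0, ref_img.shape[0] - 1)
    return ref_img[sy, sx].reshape((h, w) + ref_img.shape[2:])
```

Fitting the spline from target landmarks to reference landmarks (rather than the other way round) means every output pixel looks up a source location, so the warped reference has no holes.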
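The layer decomposition can be sketched as below, assuming an sRGB input with values in [0, 1] and the standard D65 sRGB-to-CIELab conversion. The WLS step solves the linear system (I + λ L_g) u = g described by Farbman et al. [2] using SciPy sparse matrices. Using the lightness scaled to [0, 1] as the smoothness guide (instead of log-luminance), the default λ, α and ε values, and the helper names `wls_base` and `decompose_face` are assumptions made for this illustration.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def srgb_to_lab(rgb):
    """Convert an sRGB image in [0, 1] to CIELab (D65); returns L (h, w) and a*b* (h, w, 2)."""
    rgb = np.asarray(rgb, dtype=float)
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    M = np.array([[0.4124564, 0.3575761, 0.1804375],
                  [0.2126729, 0.7151522, 0.0721750],
                  [0.0193339, 0.1191920, 0.9503041]])
    xyz = lin @ M.T / np.array([0.95047, 1.0, 1.08883])  # normalise by the D65 white point
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return L, np.stack([a, b], axis=-1)


def wls_base(L, lam=1.0, alpha=1.2, eps=1e-4):
    """Edge-preserving WLS smoothing of the lightness channel (large-scale layer)."""
    h, w = L.shape
    guide = (L / 100.0).ravel()  # assumption: scaled lightness as the guide
    # Forward differences on the row-major flattened image.
    Dx = sp.kron(sp.identity(h), sp.diags([-1, 1], [0, 1], shape=(w - 1, w))).tocsr()
    Dy = sp.kron(sp.diags([-1, 1], [0, 1], shape=(h - 1, h)), sp.identity(w)).tocsr()
    # Smoothness weights are small across strong edges, so edges are preserved.
    ax = 1.0 / (np.abs(Dx @ guide) ** alpha + eps)
    ay = 1.0 / (np.abs(Dy @ guide) ** alpha + eps)
    Lg = Dx.T @ sp.diags(ax) @ Dx + Dy.T @ sp.diags(ay) @ Dy
    A = (sp.identity(h * w) + lam * Lg).tocsc()
    return spsolve(A, L.ravel()).reshape(h, w)


def decompose_face(rgb, lam=1.0):
    """Split an aligned face into large-scale lightness, small-scale detail and color."""
    L, ab = srgb_to_lab(rgb)
    large = wls_base(L, lam=lam)
    detail = L - large
    return large, detail, ab
```

A larger λ pushes more of the lightness variation into the detail layer; the value would have to be tuned on real face images.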
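The gradient-based transfer of the large-scale layer can be illustrated with a small gradient-domain sketch. Here `mask` is a hypothetical (h, w) binary array marking makeup regions; inside it, each gradient component is taken from the reference when β times its magnitude exceeds the target's, and the result is recovered by solving a screened Poisson equation whose small μ term anchors the overall level to the target. The selection rule, β and μ are assumptions for this example and are not taken from [3].

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import spsolve


def diff_ops(h, w):
    """Forward-difference operators on a row-major flattened h x w image."""
    dx = sp.kron(sp.identity(h), sp.diags([-1, 1], [0, 1], shape=(w - 1, w)))
    dy = sp.kron(sp.diags([-1, 1], [0, 1], shape=(h - 1, h)), sp.identity(w))
    return dx.tocsr(), dy.tocsr()


def transfer_large_scale(tgt, ref, mask, beta=1.0, mu=1e-3):
    """Merge reference gradients into the target large-scale layer and re-integrate."""
    h, w = tgt.shape
    dx, dy = diff_ops(h, w)
    t, r = tgt.ravel(), ref.ravel()
    m = np.asarray(mask, dtype=float).ravel()
    gx_t, gy_t = dx @ t, dy @ t
    gx_r, gy_r = dx @ r, dy @ r
    # An edge counts as "in the mask" if it touches a masked pixel.
    mx = (abs(dx) @ m) > 0
    my = (abs(dy) @ m) > 0
    gx = np.where(mx & (beta * np.abs(gx_r) > np.abs(gx_t)), gx_r, gx_t)
    gy = np.where(my & (beta * np.abs(gy_r) > np.abs(gy_t)), gy_r, gy_t)
    # Minimise ||Dx u - gx||^2 + ||Dy u - gy||^2 + mu ||u - t||^2 via its normal equations.
    A = (dx.T @ dx + dy.T @ dy + mu * sp.identity(h * w)).tocsc()
    b = dx.T @ gx + dy.T @ gy + mu * t
    return spsolve(A, b).reshape(h, w)
```

With an empty mask, or when the reference gradients are never stronger, the chosen gradients are exactly the target's and the solve returns the target layer unchanged.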
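The final recombination reduces to a per-pixel linear blend: for each of the a* and b* channels, c_result = (1 - α) c_target + α c_reference, with α = γ · mask. The sketch below assumes a soft makeup mask with values in [0, 1] and a hypothetical global opacity γ, and rebuilds the lightness as the transferred large-scale layer plus the target's small-scale layer.

```python
import numpy as np


def blend_color(tgt_ab, ref_ab, mask, gamma=0.8):
    """Alpha-blend reference a*/b* channels into the target inside the makeup mask.

    mask is an (h, w) array in [0, 1]; gamma is a hypothetical global opacity.
    """
    alpha = gamma * np.asarray(mask, dtype=float)[..., None]
    return (1.0 - alpha) * tgt_ab + alpha * ref_ab


def recombine(large_result, tgt_detail, ab_result):
    """Rebuild a CIELab image: lightness = transferred large-scale layer + target detail."""
    L = large_result + tgt_detail
    return np.concatenate([L[..., None], ab_result], axis=-1)
```

Keeping the target's own detail layer preserves skin texture, while only the smooth lightness and the color channels carry the reference makeup.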

It will be assumed that the reference and the target were captured under similar lighting conditions, so that differences in highlights between the two images can be attributed to makeup. Both subjects will face the camera straight and upright, with eyes open and mouths closed, so that the Active Shape Model can locate the facial features reliably.

References

[1] S. Milborrow and F. Nicolls, "Locating Facial Features with an Extended Active Shape Model," in ECCV, 2008.

[2] Z. Farbman et al., "Edge-preserving decompositions for multi-scale tone and detail manipulation," ACM Transactions on Graphics (TOG), vol. 27, no. 3, p. 67, 2008.

[3] X. Chen et al., "Face illumination transfer through edge-preserving filters," in Computer Vision and Pattern Recognition (CVPR), 2011.
