Detection of flood survivors through IR imagery on an autonomous drone platform

OUTPUT

PROJECT PROPOSAL

Objective

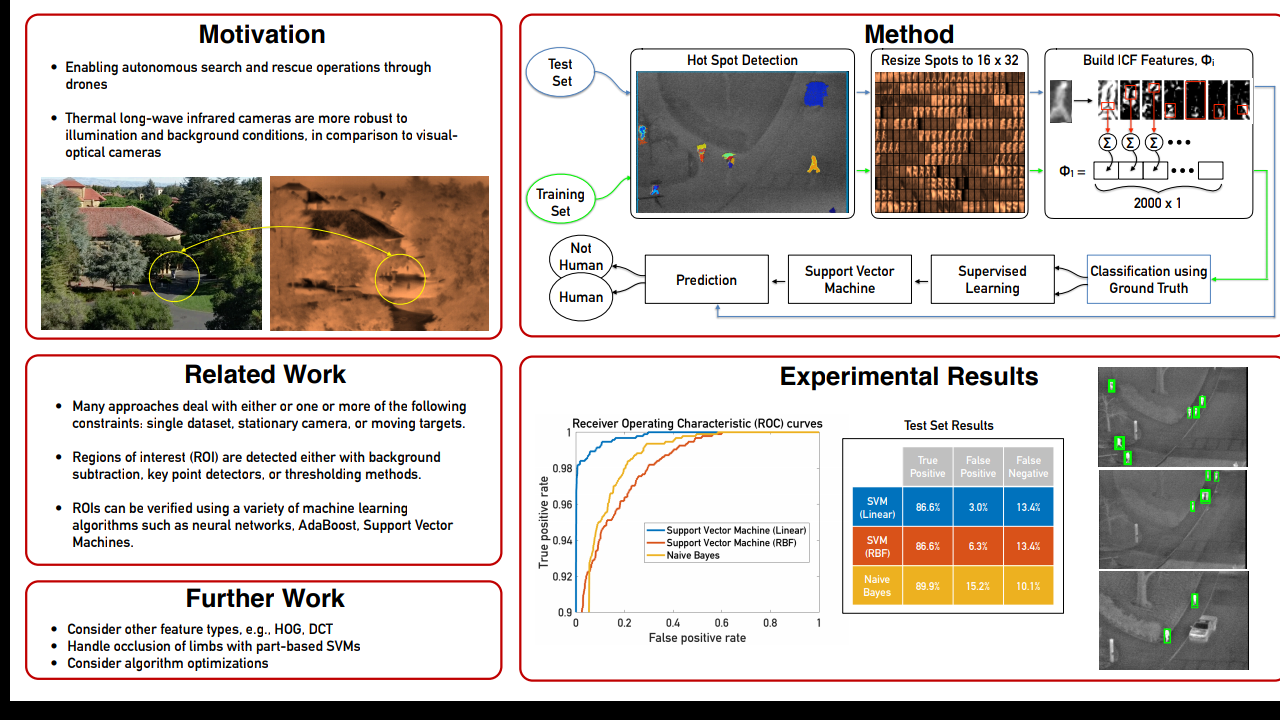

The goal is to detect human survivors in infrared and visual imagery to enable autonomous search and rescue operations by drones.

Goals

1. Demonstrate a proof of concept of the algorithms in MATLAB on recorded video sequences.

2. Integrate the algorithm (written in C++) with the hardware and validate it running in real time on the NVIDIA Jetson TK1 processor aboard a DJI M100 quadcopter.

Solution

The proposed solution will emulate the two-stage approach described by Teutsch et al. [1]: (1) the application of Maximally Stable Extremal Regions (MSER) to detect hot spots and (2) the verification of the detected hot spots using a Discrete Cosine Transform (DCT) based descriptor and a modified Random Naive Bayes classifier.
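To make the second stage more concrete, here is a minimal C++ sketch of what a DCT-based descriptor for a candidate hot spot could look like. It is not the descriptor from [1]: the function names `dct2d` and `dctDescriptor`, the square row-major patch layout, and the choice to keep the top-left k x k block of low-frequency coefficients are all assumptions made for illustration. The resulting vector would then be passed to a classifier, which is not shown here.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper (not from [1]): orthonormal 2D DCT-II of a square
// n x n patch stored row-major. Direct O(n^4) evaluation, which is
// acceptable for the small patches around a candidate hot spot.
std::vector<double> dct2d(const std::vector<double>& patch, std::size_t n) {
    assert(patch.size() == n * n);
    const double pi = std::acos(-1.0);
    std::vector<double> out(n * n, 0.0);
    for (std::size_t u = 0; u < n; ++u) {          // vertical frequency
        for (std::size_t v = 0; v < n; ++v) {      // horizontal frequency
            double sum = 0.0;
            for (std::size_t y = 0; y < n; ++y) {
                for (std::size_t x = 0; x < n; ++x) {
                    sum += patch[y * n + x]
                         * std::cos(pi * (2.0 * y + 1.0) * u / (2.0 * n))
                         * std::cos(pi * (2.0 * x + 1.0) * v / (2.0 * n));
                }
            }
            // Orthonormal scaling factors for the DCT-II basis.
            const double au = (u == 0) ? std::sqrt(1.0 / n) : std::sqrt(2.0 / n);
            const double av = (v == 0) ? std::sqrt(1.0 / n) : std::sqrt(2.0 / n);
            out[u * n + v] = au * av * sum;
        }
    }
    return out;
}

// Hypothetical descriptor (an assumption, not the layout used in [1]):
// keep the k x k block of lowest-frequency coefficients, row by row.
std::vector<double> dctDescriptor(const std::vector<double>& patch,
                                  std::size_t n, std::size_t k) {
    assert(k <= n);
    const std::vector<double> coeffs = dct2d(patch, n);
    std::vector<double> desc;
    desc.reserve(k * k);
    for (std::size_t u = 0; u < k; ++u) {
        for (std::size_t v = 0; v < k; ++v) {
            desc.push_back(coeffs[u * n + v]);
        }
    }
    return desc;
}
```

Calling `dctDescriptor(patch, 16, 4)` on a 16 x 16 patch, for example, would yield a 16-element feature vector. Keeping only low-frequency coefficients is a common way to summarize the coarse shape of a small, low-resolution region while discarding fine detail, which is why it is used here as the illustrative choice.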

Project Outline

Immediately after disasters such as floods in the watersheds of major rivers or in areas struck by major hurricanes, search and rescue operations are hampered by uncertainty about the number and location of survivors. To reduce this uncertainty, teams of autonomous aerial robotic platforms can help localize survivors by drawing on recent advances in digital image processing. I propose to thoroughly examine and validate the approach discussed in [1] as a possible solution. An alternative solution is presented in [2] and [3]; however, I choose to explore [1] first because its approach does not require visual imagery and claims to be robust to occlusion, which can be a significant challenge since survivors may be partially submerged in water. Datasets for training and validating the algorithm are provided in [3], [4], and [5].

Logistics

I am working with three other students who are not enrolled in EE 368. They are responsible for the flight control, collision avoidance, and guidance of the autonomous M100 platform, while I am working on human detection. I have acquired a FLIR infrared camera and captured a few video sequences of one of my teammates in a swimming pool (a still from one of them is shown at the bottom right of the title image).

References

[1]. http://www.cv-foundation.org/openaccess/content_cvpr_workshops_2014/W04/papers/Teutsch_Low_Resolution_Person_2014_CVPR_paper.pdf

[2]. http://www.mva-org.jp/Proceedings/2011CD/papers/14-22.pdf

[3]. http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Hwang_Multispectral_Pedestrian_Detection_2015_CVPR_paper.pdf

[4]. http://projects.asl.ethz.ch/datasets/doku.php?id=ir:iricra2014

[5]. http://vcipl-okstate.org/pbvs/bench/
